Welcome to my newsletter, which I call Drop-In Class because each edition is like a short, fun Peloton class for technology concepts. Except unlike a fitness instructor, I'm not an expert yet: I'm learning everything at the same time you are. Thanks for following along with me as I "learn in public"!

Why real-time data streaming matters right now

Imagine Ferris Bueller’s Day Off taking place in 2024. When Ferris uses Google Maps to get downtown, how does Google Maps know to route him around the parade traffic? When he hops in an Uber (after dropping the Ferrari in the garage), how does Uber calculate surge pricing? When Sloane places a live bet at the Cubs game, how does the sports betting app make sure it’s really Sloane and not a fraudster? And when Cameron is being a sad sack taking a sick day, how does his Netflix account know what shows to recommend?

Life moves pretty fast, as Ferris says in the movie. And today it moves even faster than it did in the ’80s, with modern applications crunching through loads of data in real time.

So let’s learn a bit about real-time data streaming, also called event streaming. Today’s music is from the Ferris soundtrack. Ohh yeahhhh.

Event streaming: If you have to stop and analyze it, it’s already too late

Here’s how traditional data analytics goes: You collect data, store it, and then analyze it all at once, maybe once a day or weekly. You’re answering questions like, “How is this marketing campaign performing?” This is called batch processing. You’re dealing with the data in batches. One big chunk at a time.

But life doesn’t happen in batches. Life happens in events.

For things like fraud detection in Sloane’s sports betting app, the analysis has to happen RIGHT NOW with the data reflecting THIS EXACT MOMENT, with no human involved. When someone else uses your credit card to place a bet, you don’t want the app to wait a week to see if it was fraud. It needs to be caught right when it happens.

That’s when we use event streaming, also referred to as event-driven processing or real-time data streaming.

Let’s break that down real quick:

Event: An “event” is any time something generates data: You click on a pair of shoes you’ve been thinking about buying. You add it to your cart. You place the order.

Stream: Instead of batches, the data arrives in a never-ending flow in real time. No start or end to it.

Processing: Right when it’s generated, data is processed and acted on. The moment you click on a shoe, it’s already updated some algorithm and you start seeing that shoe appear in your social media ads and your nightmares until you buy it.

You use event streaming when you need to react to what happened, the second it happened. You aren’t just collecting it in real time — you’re analyzing it in real time and taking action. Well, not literally you. There’s not enough time for that.
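To make the batch vs. streaming difference concrete, here’s a minimal Python sketch I put together. Everything in it is made up for illustration: the `BETS` data, the `SUSPICIOUS_AMOUNT` threshold, and the function names are my assumptions, and real fraud detection is far more sophisticated than "big number = bad."

```python
from typing import Dict, Iterable, Iterator, List

# Hypothetical bet events, invented for this sketch.
BETS: List[Dict] = [
    {"user": "sloane", "amount": 20},
    {"user": "sloane", "amount": 25},
    {"user": "sloane", "amount": 900},
    {"user": "sloane", "amount": 15},
]

SUSPICIOUS_AMOUNT = 500  # made-up threshold, not a real fraud rule


def looks_suspicious(bet: Dict) -> bool:
    """Toy rule: any bet above the threshold gets flagged."""
    return bet["amount"] > SUSPICIOUS_AMOUNT


def batch_check(bets: Iterable[Dict]) -> List[Dict]:
    """Batch style: store everything first, then analyze it all at once."""
    stored = list(bets)
    return [bet for bet in stored if looks_suspicious(bet)]


def stream_check(bets: Iterable[Dict]) -> Iterator[Dict]:
    """Streaming style: look at each event the moment it arrives."""
    for bet in bets:
        if looks_suspicious(bet):
            yield bet  # act right away, without waiting for the rest


if __name__ == "__main__":
    for alert in stream_check(BETS):
        print("Flag this bet now:", alert)
```

Both functions flag the same bet. The difference is timing: `batch_check` can’t answer until it has collected every event, while `stream_check` raises its hand as soon as the suspicious bet shows up.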

How do we process data that’s moving pretty fast?

Apache Kafka. Yep, another Apache! (See the last newsletter, about Apache Iceberg.)

Apache Kafka is an open-source event streaming system originally built at LinkedIn to handle real-time data. You may have heard of Confluent, which was founded by the Apache Kafka creators.

As with anything in data, there are alternative solutions, but Apache Kafka is so widely used (80% of the Fortune 100, according to Confluent) that it’s important to know about.

Basically, Kafka makes it possible to handle large amounts of real-time information at once without the system slowing down or collapsing. Kafka collects the data, categorizes it into topics (like labels for the data), and divides each topic up into partitions to spread the workload out. Each partition gets copied to multiple brokers (servers), so if one broker goes down, the data isn’t lost.

In other words, Kafka does what you’re supposed to do with a big problem: It breaks it down into smaller pieces.
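Here’s a toy Python sketch of that "break it into smaller pieces" idea. It is not Kafka’s actual code: real Kafka clients use their own hash function and their own replica placement logic, so the `crc32` hash, the round-robin placement, and every name and number below are assumptions I made to keep the example small.

```python
import zlib
from typing import List

# All values here are hypothetical, chosen only for illustration.
NUM_PARTITIONS = 3
BROKERS = ["broker-0", "broker-1", "broker-2"]
REPLICATION_FACTOR = 2  # how many brokers hold a copy of each partition


def partition_for(key: str, num_partitions: int = NUM_PARTITIONS) -> int:
    """Map an event key to a partition.

    crc32 is a stand-in hash; the point is only that the same key
    always lands in the same partition.
    """
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def replicas_for(partition: int) -> List[str]:
    """Simplified round-robin placement of a partition's copies."""
    return [
        BROKERS[(partition + i) % len(BROKERS)]
        for i in range(REPLICATION_FACTOR)
    ]


def surviving_copies(partition: int, failed_broker: str) -> List[str]:
    """Brokers that still hold the partition after one broker goes down."""
    return [b for b in replicas_for(partition) if b != failed_broker]


if __name__ == "__main__":
    for user in ["ferris", "sloane", "cameron"]:
        p = partition_for(user)
        print(user, "-> partition", p, "stored on", replicas_for(p))
```

Because every partition lives on two different brokers in this sketch, losing any single broker still leaves at least one copy of each partition.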

Batch processing vs. event streaming: Why not both?

I just went on about why event streaming is so important, but in many cases you need to supplement your real-time information with historical information.

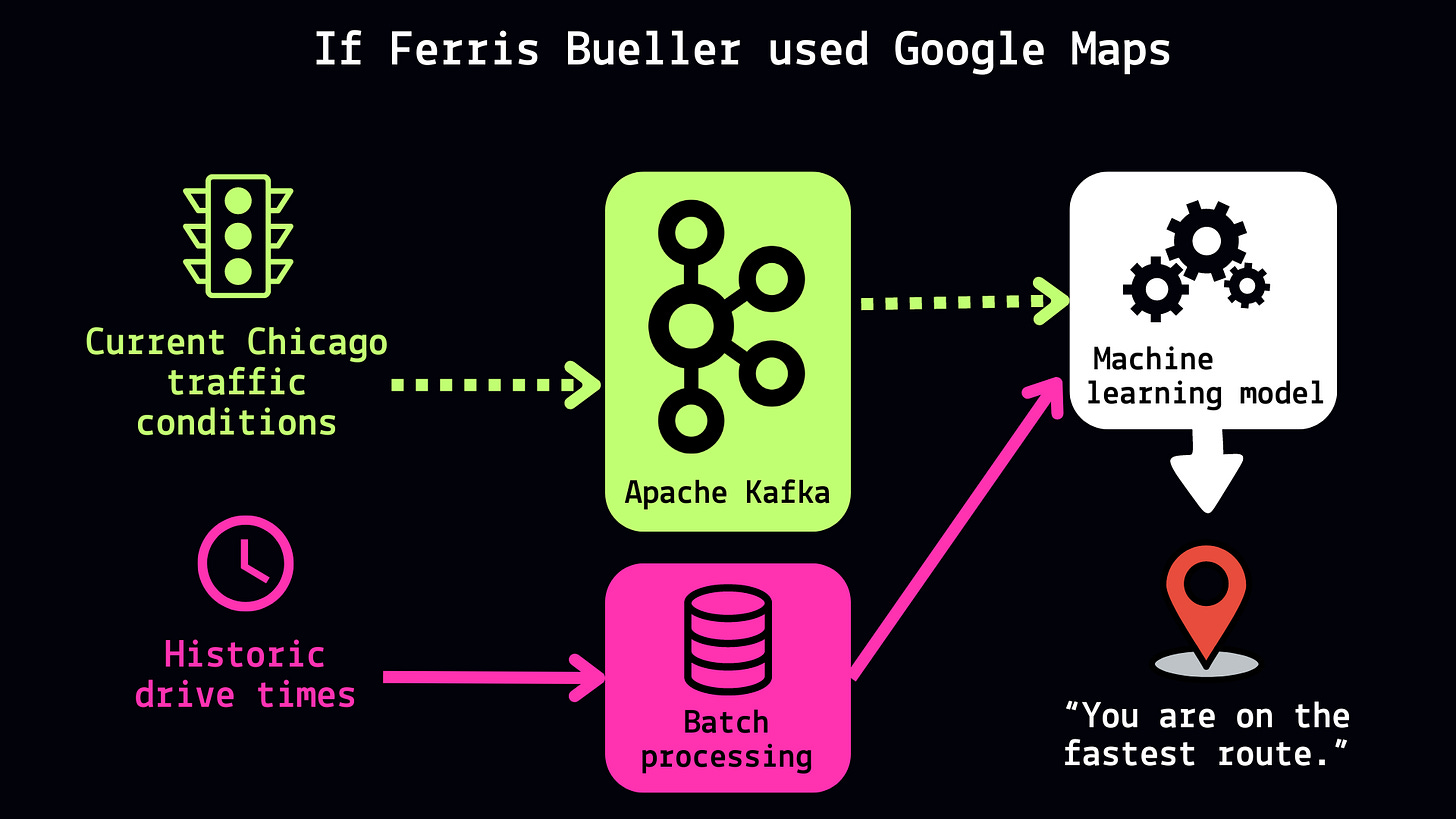

For instance, when Google Maps finds your route and estimates drive time, it pulls from both current, real-time traffic conditions and also from past information to predict the best route.
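I have no idea how Google Maps actually does this math, so treat the following as a purely hypothetical sketch of the general idea: blend a historical average (the batch side) with a live reading (the streaming side). The function name, the `live_weight` parameter, and the numbers are all my own assumptions.

```python
def estimate_minutes(
    historical_avg: float,
    live_minutes: float,
    live_weight: float = 0.7,
) -> float:
    """Blend a historical average with a live reading.

    live_weight is a made-up knob: 1.0 trusts only the live reading,
    0.0 trusts only history.
    """
    if not 0.0 <= live_weight <= 1.0:
        raise ValueError("live_weight must be between 0 and 1")
    return live_weight * live_minutes + (1.0 - live_weight) * historical_avg


if __name__ == "__main__":
    # Hypothetical numbers: the drive usually takes 20 minutes,
    # but parade traffic makes it look like 40 right now.
    print(estimate_minutes(historical_avg=20.0, live_minutes=40.0))
```

The weighted average is just the simplest possible way to combine the two sources. The takeaway is that the estimate needs both inputs, not that this is the right formula.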

Life moves pretty fast. But to complete Ferris’ quote, you have to stop and look around once in a while.

Today’s apps react to life as it happens in real time, but they also stop to process what’s already happened in the past. Both real-time processing and batch processing are important! Kind of like living in the moment but also self-reflecting. Did this just get philosophical? That’s how I know I’m really starting to act like a Peloton instructor.

Extra credit reading

This is a 101-level drop-in, so I keep things at a high level! For deeper reading, check out these great articles.

5 Real-Time Data Processing and Analytics Technologies – And Where You Can Implement Them (Seattle Data Guy)

Apache Kafka Benefits and Use Cases (Confluent)

See you in the next Drop-In!

Cheers,

Alex